OpenAI, the company behind AI models such as DALL-E and ChatGPT, has launched a free tool that aims to distinguish human-written text from AI-generated text. However, the company warns that the classifier is imperfect and should not be used as the sole basis for a decision. It can help judge whether text was written by an AI or a human, but its results should not be treated as definitive.

Using the classifier is straightforward and requires only a free OpenAI account. Paste the text in question into the designated box, click the button, and the tool will indicate whether it considers the text “very unlikely,” “unlikely,” “unclear,” “possibly,” or “likely” AI-generated. To train the model that powers the tool, OpenAI used a dataset of paired human-written and AI-generated text on a range of topics.

Despite its simplicity, the classifier comes with limitations and warnings. OpenAI requires a minimum of 1,000 characters, roughly 150 to 250 words, because the tool is unreliable on shorter text. Even with enough text, the classifier can mislabel both AI-generated and human-written writing. AI-generated text can also be edited to evade the classifier, which makes it less effective in some circumstances. Finally, the tool struggles with text written by children and with languages other than English, as it was trained primarily on English content written by adults.
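
The length requirement is easy to check before pasting text into the tool. The snippet below is a minimal sketch, not part of OpenAI's tool: the function name `long_enough` and the choice to count every character, including whitespace, are assumptions made for illustration.

```python
# Minimum length OpenAI states for the classifier (1,000 characters).
MIN_CHARS = 1000


def long_enough(text: str, min_chars: int = MIN_CHARS) -> bool:
    """Return True if the text meets the stated minimum length.

    Assumption: every character counts, whitespace included. How the
    actual tool counts characters is not specified in this article.
    """
    return len(text) >= min_chars


if __name__ == "__main__":
    sample = "This is a short note."
    if not long_enough(sample):
        print(f"Only {len(sample)} characters; the tool needs at least {MIN_CHARS}.")
```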

OpenAI is aware of the limitations of its classifier and provides warnings to users. The company acknowledges that the tool can sometimes “incorrectly but confidently” label human-written text as AI-generated, particularly if the text deviates significantly from the training data. OpenAI stresses that the classifier is still a “work-in-progress” and that it is constantly working to improve its accuracy.

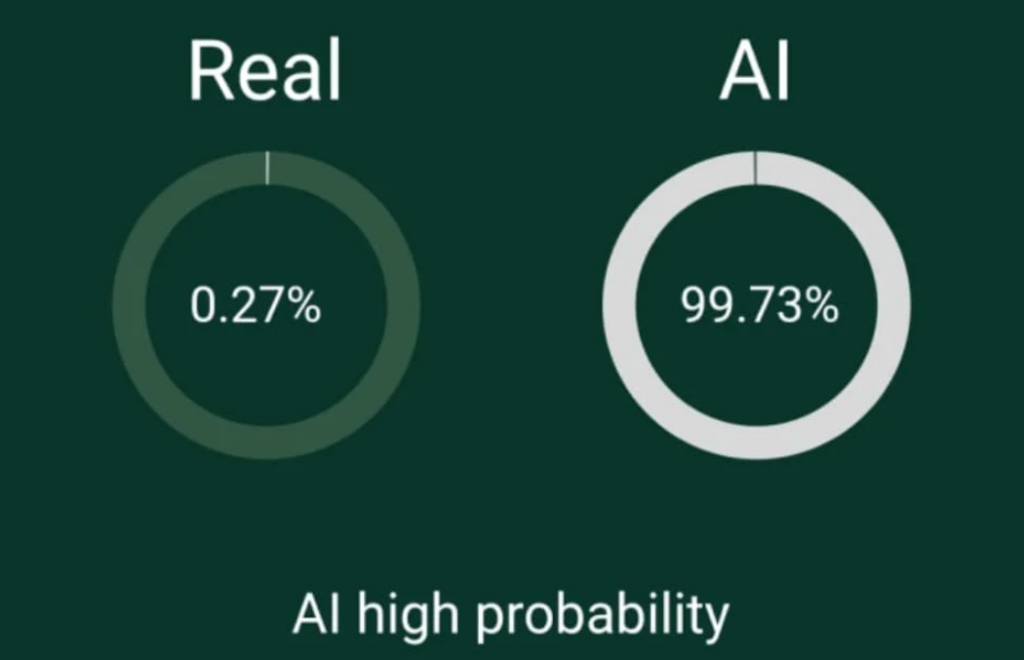

Despite these limitations, OpenAI’s classifier has shown some promising results. For example, when tested on ChatGPT responses that have been posted online, the tool marked the majority as “possibly” or “likely AI-generated.” In OpenAI’s internal tests, the tool correctly labeled AI-generated text as “likely AI-generated” 26% of the time and wrongly labeled human-written text as AI-generated 9% of the time, an improvement over the company’s previous AI-detection tool. Put another way, about 74% of the AI-written samples in those tests did not receive the “likely” label.
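
What those two percentages mean in practice depends on how much of the submitted text is actually AI-written, a figure the article does not give. The sketch below applies Bayes' rule to the reported 26% and 9% rates. The function name `flagged_precision` and the prevalence values are assumptions chosen only for illustration, and it assumes the 9% figure refers to the same “likely AI-generated” label.

```python
def flagged_precision(prevalence: float, tpr: float = 0.26, fpr: float = 0.09) -> float:
    """Share of flagged texts that are really AI-written.

    prevalence: assumed fraction of all submitted texts that are AI-written
                (hypothetical; not reported by OpenAI).
    tpr: rate at which AI text is flagged (26% in OpenAI's internal tests).
    fpr: rate at which human text is flagged (9% in the same tests).
    """
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must be between 0 and 1")
    true_flags = tpr * prevalence            # AI-written and flagged
    false_flags = fpr * (1.0 - prevalence)   # human-written but flagged
    total = true_flags + false_flags
    return true_flags / total if total > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical prevalence values, for illustration only.
    for prevalence in (0.10, 0.50):
        share = flagged_precision(prevalence)
        print(f"prevalence {prevalence:.0%}: {share:.0%} of flagged texts are AI-written")
```

Under these assumptions, if half of all submissions were AI-written, about 74% of flagged texts would be AI-written. If only one in ten were, the share drops to about 24%, so most flags would land on human writers. This is one reason the results should not be treated as definitive.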

OpenAI is not the first to introduce a tool for detecting AI-generated text. Similar tools, such as GPTZero, have been available since the rise of ChatGPT. However, OpenAI is specifically targeting the educational sector with its tool. The company is engaging with educators in the US to understand how ChatGPT is being used in the classroom and is seeking feedback from those involved in education. Detecting AI-generated text is a contested issue in education, with some schools embracing the technology and others banning it outright. With its classifier, OpenAI hopes to give educators a tool that helps them judge whether text was written by an AI or a human.